My cab is at least as full of electronics as a luxury car. I have a media system, three satnavs (phone, vehicle and commercial TomTom – the latter the only one that I can trust as the truck's owner doesn't pay for the map updates), automatic gearbox, air suspension, radar, lane follower and the bloody driving assistance camera (about which more later). Even my steering wheel is 100% electronic; it's not connected to the wheels at all.

I'm far from a Luddite technophobe. I was an early adopter of personal computers (Amstrad), mobile phones (the size of a breeze block) and spreadsheets. I even ran a technology company and could programme a video recorder. Despite this, I worry that sometimes the electronics in my cab are a liability. The lane assistance thingy (industry term) uses a camera to track the cat's eyes and white lines; if I stray, it corrects my steering. In my first week either the white lines were dirty or an obese horsefly committed suicide and blocked the camera's view. I started having to deal with random steering inputs from the computers. It was easy enough to override them by steering against the computer’s push, but it was very disconcerting.

Eventually I found the menu option to turn the lane follower off. (I have three or four main menus, many of which have long sub menus.) Then I started to worry about what other electronic gremlins and booby traps lurked in the truck’s systems. Nowadays I mostly manage not to wonder about what other algorithm might intrude into my relationship with the steering. Yes, I know it's all been tested and there are tens of thousands of trucks just like mine blah blah blah. But I still worry because one's truck trying to steer itself is memorable.

I survived that because I was in the loop, was paying attention and had an override. The system was designed to be advisory rather than expert. It's rule based and subject to override, and its designers made no attempt to make it “intelligent.” Unlike those pursuing artificially intelligent control systems for cars (and everything else), they accepted the need for human intervention.
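To make “rule based, subject to override” concrete, here is a minimal sketch of what such an advisory rule set might look like. It is not the truck's actual software: the function name `assist_torque_nm` and every threshold (0.5 m of drift, 3 Nm of driver effort, the gain) are hypothetical values chosen purely for illustration.

```python
def assist_torque_nm(offset_m: float, driver_torque_nm: float,
                     max_offset_m: float = 0.5, override_nm: float = 3.0,
                     gain_nm_per_m: float = 4.0) -> float:
    """Return a corrective steering torque in Nm (0.0 means 'do nothing').

    offset_m: lateral drift from the lane centre (positive = drifting right).
    All thresholds are hypothetical, for illustration only.
    """
    # Rule 1: the driver always wins; a firm hand on the wheel cancels the assist.
    if abs(driver_torque_nm) >= override_nm:
        return 0.0
    # Rule 2: small drifts are left alone.
    if abs(offset_m) <= max_offset_m:
        return 0.0
    # Rule 3: otherwise nudge back towards the centre, in proportion to the drift.
    return -gain_nm_per_m * offset_m


print(assist_torque_nm(0.8, driver_torque_nm=0.0))   # drifting, hands light: nudge back
print(assist_torque_nm(0.8, driver_torque_nm=5.0))   # driver steering firmly: 0.0
```

The ordering is the design choice that matters: the override check comes first, so however badly the camera misreads the road, a firm hand on the wheel wins.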

Expert Systems

True Artificial Intelligence (AI) (as opposed to marketing flim-flam) is where the computer programme develops its own rules based on its experience and, ideally, the experience of every other AI system. One well-known family of techniques is genetic algorithms, so called because candidate solutions evolve as they are tested against experience. Defining artificial intelligence is a whole academic field of its own. IBM, which knows a bit about computers, has published a concise introduction here.

The code improves itself from a sound base. In theory that means that any AI system is far more expert within its domain than any human could possibly be. That in turn means that it can do any job better than any human, or any team of humans. As a computer doesn't get tired, doesn't have a life outside work, is rational rather than emotional and has no employment rights, it's a cheaper solution – or so the salesmen and enthusiasts will tell you.

So will politicians in charge of failed, underfinanced public services. Current political mutterings such as “AI drone swarms will destroy tanks” and “AI diagnostics will save the NHS” give the (false) impression of a clear path to a better future and of something sensible being done on their watch.

I'm far from convinced.

The starting set of rules informs the AI programme of what it is to become expert in, what “good” looks like and how to measure it. The programme runs, measures outcomes and adapts its code to deliver better outcomes more of the time. With more data and more and more feedback it should become more expert. (Whether one can be a little bit expert we’ll pass on for this article.) One of the most successful and widely known such programmes is Google Translate, which has been going since 2006. It’s getting pretty good, but it’s far from perfect.
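The run, measure, adapt loop described above can be sketched in a few lines. This is a minimal (1+1) evolution strategy, a simple relative of the genetic algorithms mentioned earlier, and not how Google Translate or any other product works; `score`, `learn` and the numeric targets are hypothetical. The designers' rules live only in `score`, which defines what “good” looks like.

```python
import random


def score(setting: float, target: float) -> float:
    """'What good looks like', fixed by the designers: closer to target is better."""
    return -(setting - target) ** 2


def learn(target: float, steps: int = 1000, seed: int = 42) -> float:
    """Repeatedly try a small random change and keep it only if the score improves."""
    rng = random.Random(seed)
    current = 0.0                                    # the starting rule: an arbitrary setting
    for _ in range(steps):
        candidate = current + rng.gauss(0.0, 0.05)   # try a small change to the rule
        if score(candidate, target) > score(current, target):   # measure the outcome
            current = candidate                      # keep the change only if it did better
    return current


print(round(learn(0.7), 2))    # settles close to 0.7
print(round(learn(-2.0), 2))   # settles just as happily close to -2.0
```

The loop pursues whatever target it is given with equal diligence. If the designers' idea of “good” is wrong, the programme becomes an expert in the wrong thing, which is why the quality of the starting rules matters so much.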

A friend is a medical interpreter for the NHS. She insists on interpreting only face to face, as she can better judge meaning and comprehension (or the lack of it) from non-verbal signals as well as from what is said. She has lost count of the number of times that a problem has been misdiagnosed because of the shortcomings of telephone interpreting and of Google Translate – which is sometimes used for clerical tasks. Of course, telephone interpreters are cheaper and Google Translate is free, but medical misunderstandings can be catastrophic. Google Translate is a fantastic tool for trivial stuff and it’s getting better. But it’s not an interpreter and never will be, as it’s searching for semantic equivalence, not true meaning. It can’t look the speaker in the eye. (Nor can it hold new-born babies or perform CPR, as my friend has had to – humans are far more adaptable than a computer.)

Of course, a technophile would add functionality: a camera and more AI software to read the emotions and meaning of facial expressions. Perhaps that would work, although it would also have to adjust for culture, ethnicity and, of course, the medical condition itself. It would need confidential medical data to stand a chance, and that presents a whole bunch of data issues. Even if they could be overcome, at what stage would the system be deemed good enough to be trusted? It seems to me that many “AI” solutions are selling vapourware. Frequent, expensive expansions of such programmes consume cash that would otherwise be available to solve today’s problems today – perhaps by paying NHS interpreters’ travel expenses.

Rule Based Systems

AI systems are rule based systems that have rules to change their own rules to deliver better performance. The efficacy of any rule based system is determined entirely by the quality of the rules. If the fundamental ones in the AI are less than perfect (what isn’t?) then problems are likely.

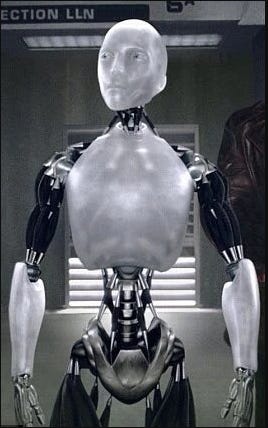

An excellent example is Isaac Asimov’s Three Laws of Robotics, which you may recall are:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

You might think that these were clear, tight and foolproof. You would be wrong, as he demonstrates repeatedly in “I, Robot.” Even for a robot, life is more nuanced than simple laws. Take a trip to the Old Bailey and you’ll see that it’s even more complex for humans – there’s a reason for defence barristers. In 2022 over 20% of Crown Court cases (that is, serious criminal ones) ended in “not guilty” verdicts.
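One way to see how simple laws run out of road is to encode Asimov's three as strict, ordered filters. This is a deliberately naive, hypothetical encoding (the `Action` fields and the `choose` function are invented for illustration), and its weakness shows as soon as every available option breaks the First Law.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Action:
    name: str
    harms_human: bool      # injures a human or, through inaction, lets one come to harm
    obeys_order: bool      # carries out the order a human has given
    endangers_robot: bool  # risks the robot's own existence


def choose(actions: List[Action]) -> Optional[Action]:
    """Apply the three laws as strict, ordered filters (a naive, hypothetical encoding)."""
    # First Law: absolute.
    safe = [a for a in actions if not a.harms_human]
    if not safe:
        return None  # every option breaks the First Law: the rules have no answer
    # Second Law: obey, unless no safe option obeys.
    obedient = [a for a in safe if a.obeys_order] or safe
    # Third Law: self-preservation, subordinate to the first two.
    careful = [a for a in obedient if not a.endangers_robot] or obedient
    return careful[0]


# Two people are in danger and the robot can only reach one of them.
dilemma = [
    Action("save the first person", harms_human=True, obeys_order=False, endangers_robot=False),
    Action("save the second person", harms_human=True, obeys_order=False, endangers_robot=False),
    Action("do nothing", harms_human=True, obeys_order=False, endangers_robot=False),
]
print(choose(dilemma))   # None
```

Faced with the dilemma, the rules return no answer at all. A real system would need a further rule for that case, and then rules for the exceptions to that rule.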

The financial markets use rule based computers widely; the practice goes by the name of “algorithmic trading.” For all the theories and economics, markets are fundamentally ruled by greed and fear. The latter impairs the rationality of all humans, including City traders. Irrational people make poor decisions and fear can turn quickly to panic. When a stock (or bond) is falling in value and people’s wealth is disappearing before their eyes they might panic. Worse, they might dither, and in a rapidly moving market time really is money. Computer software can’t panic and is very fast, so many – if not most – trades are made by computers.
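A trading rule of this kind can be as simple as a stop-loss. The sketch below is hypothetical (`stop_loss_index` and the 10% threshold are illustrative assumptions, not any bank's rule): it sells the instant the price falls far enough, with no panic and no dithering, but also with no idea why the price fell.

```python
from typing import Optional, Sequence


def stop_loss_index(prices: Sequence[float], entry: float,
                    stop_pct: float = 0.10) -> Optional[int]:
    """Index of the first price at or below entry * (1 - stop_pct), or None if never hit."""
    trigger = entry * (1 - stop_pct)
    for i, price in enumerate(prices):
        if price <= trigger:
            return i   # sell now: no panic, no dithering, and no second thoughts
    return None


print(stop_loss_index([100, 97, 93, 89.9, 95], entry=100))   # 3
```

In the example the rule sells at 89.9 and never sees the recovery to 95; whether that was wise depends on context the rule simply doesn't have.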

In the 2007 financial crash, fast trading of ill-conceived securities spread the consequences of selling mortgages to people who could not possibly pay – a financial idiocy that none of the human experts worried about – across the entire banking sector. It turned out that the experts were idiots, despite their Ivy League and Oxbridge educations. David Viniar, the Chief Financial Officer of Goldman Sachs – an educated and highly experienced banker – infamously said “We were seeing things that were 25 standard deviation moves, several days in a row.” He was, rightly, ridiculed. (Under a normal distribution, the probability of a single 25 standard deviation move in one direction is about 3 × 10⁻¹³⁸: a decimal point followed by 137 zeros and then a 3. The universe is only about 5,000,000,000,000 days old.)
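The bracketed arithmetic can be checked with Python's standard library. The sketch assumes a normal distribution, as quoting moves in standard deviations usually implies, and takes the age of the universe as roughly 13.8 billion years. Multiplying probabilities this small directly (`p ** 3`) underflows to zero in double precision, so the “several days in a row” figure is computed with logarithms and assumes the days are independent.

```python
import math


def upper_tail_probability(k: float) -> float:
    """P(Z > k) for a standard normal Z, via the complementary error function."""
    return 0.5 * math.erfc(k / math.sqrt(2))


p = upper_tail_probability(25)
print(f"One 25-sd move: {p:.1e}")                    # about 3e-138

universe_days = 13.8e9 * 365.25                      # assumed age: ~13.8 billion years
print(f"Age of the universe: {universe_days:.1e} days")

# Expected number of 25-sd days if markets had traded every day since the Big Bang
print(f"Expected 25-sd days: {universe_days * p:.1e}")

# Three such days in a row, assuming independence: work in log10 to avoid underflow
print(f"Three in a row: about 10^{3 * math.log10(p):.0f}")
```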

It's reasonable to assume that the CFO of a big bank is as close to a banking expert as you can get. Yet he clearly did not understand his “expert” systems. For a start, turning a move measured in standard deviations into a probability only works if the data follow a “normal” distribution, which works very well for some truly random processes, such as the total of many dice rolls. While shoehorning data into a normal distribution can be very helpful in analysis, doing so is creating a model. A model is an approximation and an approximation, by definition, has errors. While the analysis of non-normal distributions is part of the exciting life of statisticians, and there is a plethora of other distributions available, they’re still models. Which means they still have errors.
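How much the choice of model matters shows up in the tails. The sketch compares the normal distribution with a Laplace distribution scaled to the same standard deviation of 1; the Laplace is chosen purely because its tail has a simple closed form, not as a claim about how market returns, or Goldman's models, actually behave.

```python
import math


def normal_tail(k: float) -> float:
    """P(X > k) for a normal X with standard deviation 1."""
    return 0.5 * math.erfc(k / math.sqrt(2))


def laplace_tail(k: float) -> float:
    """P(X > k), k >= 0, for a Laplace X scaled so that its standard deviation is also 1."""
    b = 1 / math.sqrt(2)            # Laplace(0, b) has variance 2 * b**2 = 1
    return 0.5 * math.exp(-k / b)


for k in (2, 5, 10, 25):
    print(f"{k:>2} sd: normal {normal_tail(k):.1e}   Laplace {laplace_tail(k):.1e}")
```

At 25 standard deviations the two models disagree by more than a hundred orders of magnitude: same standard deviation, wildly different “probability.”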

Which means that any decision based on the statistical analysis of a wide range of data, whether by human or computer, has an inherent error. And as computers work in binary, every input, computation and output must be reduced to a number.
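Even the arithmetic carries error. Most decimal fractions have no exact binary representation, so a computer stores the nearest value it can. A short illustration in Python (general floating-point behaviour, nothing specific to trading systems):

```python
from decimal import Decimal

total = 0.1 + 0.2
print(total)            # 0.30000000000000004
print(total == 0.3)     # False
print(Decimal(0.1))     # the exact binary value actually stored for 0.1
```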

In the financial markets, computers trade faster (and cheaper) than humans, but only within the constraints of the rules they have. If the human experts make a mess of the rules, the computer will still implement them, as it has no knowledge of any picture wider than the one encompassed by its rules.

Of course, investment bank techies test rules against historic data and measure how well they would have worked. At best that only produces an expert system for yesterday. More likely it introduces more errors, as trading is a bipartite exercise: I can only buy if someone wants to sell, and if they sold a security yesterday they don’t have it to sell today.
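The “expert system for yesterday” problem can be sketched with two invented price series (the prices, `backtest`, `best_stop` and the candidate thresholds are all hypothetical). The stop-loss threshold that looks best on yesterday's steady fall is the only losing choice on today's dip and recovery. The sketch ignores the bipartite point entirely: it assumes there is always a buyer at the quoted price.

```python
from typing import Sequence, Tuple


def backtest(prices: Sequence[float], stop_pct: float) -> float:
    """Buy at the first price; sell at the first later price at or below the stop,
    otherwise at the last price. Returns the fractional gain or loss."""
    entry = prices[0]
    trigger = entry * (1 - stop_pct)
    for price in prices[1:]:
        if price <= trigger:
            return price / entry - 1
    return prices[-1] / entry - 1


def best_stop(prices: Sequence[float],
              candidates: Tuple[float, ...] = (0.02, 0.05, 0.10, 0.20)) -> float:
    """Pick the stop-loss threshold that would have done best on this history."""
    return max(candidates, key=lambda s: backtest(prices, s))


yesterday = [100, 96, 92, 85, 80]     # invented: a steady fall
today = [100, 97, 99, 104, 110]       # invented: a dip, then a recovery

tuned = best_stop(yesterday)
print(tuned)                              # 0.02: sell at the first wobble
print(best_stop(today))                   # 0.05 (0.10 and 0.20 tie with it)
print(f"{backtest(today, tuned):+.2f}")   # -0.03: yesterday's best rule loses money today
```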

That brings in game theory and an article for another day. Today’s conclusion is that experts are fallible, so expert systems are fallible. Unfortunately fallibility is not the only challenge faced by AI.

Energy

AI relies on data, and that is stored and manipulated in data centres. The more data an AI programme can access, the more quickly it will learn. An International Energy Agency report (https://www.iea.org/energy-system/buildings/data-centres-and-data-transmission-networks) reckons that the world’s data centres used some 300 terawatt hours of electricity last year. That’s about 1% of the world’s annual consumption, roughly equivalent to the entire UK's annual electricity consumption. Since 1980 the world’s population has almost doubled but its electricity consumption has trebled. IBM launched its Personal Computer in 1981, since when the world has been moving a lot of electrons about the place. How much value that’s created is an open question unless you’re an electricity generator.
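Converting the IEA's annual figure into an average continuous power draw makes it easier to picture. This is simple unit arithmetic on the 300 TWh quoted above (1 TWh = 1,000 GWh; 8,760 hours in a non-leap year). Real demand varies, so peaks will be higher than this average.

```python
TWH_PER_YEAR = 300                 # IEA figure quoted above
HOURS_PER_YEAR = 365 * 24          # 8,760 in a non-leap year

# 1 TWh = 1,000 GWh, so GWh per hour gives the average draw in GW
average_gw = TWH_PER_YEAR * 1_000 / HOURS_PER_YEAR
print(f"Average draw: about {average_gw:.0f} GW")   # about 34 GW
```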

In the UK the adoption of net zero has turned electricity into an expensive and alarmingly scarce resource. AI might not be as cheap as was once thought and, should you care, increasing demand for electricity simply makes net zero even harder to achieve. The UK is already in a state where electricity companies sometimes must choose which customers get power and which don’t. Sure, data centres have backup generators, but there’s always the risk that they won’t start.

Bandwidth and Communications

It’s not just electricity. Moving data about the place needs a lot of electrons and photons. The network of cables, copper and fibre optic, is growing but finite. The same is true of mobile data and satellite data. That all comes down to money and physics.

Investment banks pay to lay their own fibre to their own data centres. But that’s not an option for mobile computers, as would be required in cars, trains and swarming drones. They’re all going to have to use part of the electromagnetic spectrum: radio waves. Data capacity depends on bandwidth, and far more bandwidth is available at higher frequencies, so, broadly, the higher the frequency the more data can be passed. Unfortunately, higher frequencies are more line of sight than lower frequencies. The signal will be interrupted if there’s something between the transmitter and receiver. Whatever programme is waiting for that bit of data will have to wait, and the user will have to hope that whoever wrote the software anticipated the problem.
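Anticipating the problem usually means refusing to wait forever. A minimal sketch using Python's standard `queue` module: the reader waits a bounded time for the next value and otherwise falls back to a value chosen in advance. The function name, timeout and fallback are hypothetical; deciding what a safe fallback is for a car, a train or a drone is the hard part, and the code can't do it for you.

```python
import queue


def next_reading(inbox: queue.Queue, timeout_s: float, fallback: float) -> float:
    """Return the next value from the radio link, or `fallback` if none arrives in time."""
    try:
        return inbox.get(timeout=timeout_s)
    except queue.Empty:
        # Signal blocked or lost: use the value the programmer decided on in advance.
        return fallback


link: queue.Queue = queue.Queue()
print(next_reading(link, timeout_s=0.1, fallback=0.0))   # nothing arrived: 0.0
link.put(42.5)
print(next_reading(link, timeout_s=0.1, fallback=0.0))   # 42.5
```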

Certainly, one workaround is to use satellites, as a clever constellation will always have line of sight to the ground (although not to people inside buildings). That may allow very high frequencies and thus lots of data, but that moves the constraint to the capacity of the satellite system itself. However clever the computing, physics limits how much data any channel can carry, so there is finite capacity. That creates a market in which the highest bidder will win.
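Whatever the band, the capacity of a single channel has a ceiling. The Shannon–Hartley theorem gives the maximum error-free data rate of a channel, C = B log₂(1 + S/N), where B is the bandwidth in hertz and S/N the signal-to-noise ratio. The sketch below evaluates it; the 20 MHz and 400 MHz channel widths and the 20 dB signal-to-noise ratio are illustrative assumptions, not the figures for any real satellite system.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon–Hartley limit C = B * log2(1 + S/N), with S/N given in decibels."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)


for bandwidth in (20e6, 400e6):   # hypothetical channel widths
    mbps = shannon_capacity_bps(bandwidth, snr_db=20) / 1e6
    print(f"{bandwidth / 1e6:.0f} MHz at 20 dB: at most {mbps:.0f} Mbit/s")
```

Twenty times the bandwidth buys twenty times the capacity at the same signal quality, which is why the high-frequency bands are attractive; but every channel still has a hard limit, and a satellite system has only so many channels to sell.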

For swarming drones and other military applications, mobile communications introduce the challenges of electronic warfare. Electronic communications can be detected (if not decoded) by relatively simple equipment. That gives the detector the option of attempting to decode, triangulating to fix the location of the transmitter and then malleting it with some high explosive (or worse), or simply jamming it. GPS jamming is part of the war in Ukraine (as it was in the first Gulf War in 1990). So is jamming drones.
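Triangulation here means taking bearings on the transmitter from two known positions and finding where the lines cross. A minimal flat-ground sketch with a hypothetical function and invented coordinates; in practice bearing errors mean that more than two measurements help.

```python
import math
from typing import Tuple

Point = Tuple[float, float]   # (east, north) in kilometres


def triangulate(p1: Point, bearing1_deg: float, p2: Point, bearing2_deg: float) -> Point:
    """Intersect two bearing lines (degrees clockwise from north) from known positions."""
    d1 = (math.sin(math.radians(bearing1_deg)), math.cos(math.radians(bearing1_deg)))
    d2 = (math.sin(math.radians(bearing2_deg)), math.cos(math.radians(bearing2_deg)))
    # Solve p1 + t*d1 = p2 + s*d2 for t using 2D cross products.
    denom = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(denom) < 1e-12:
        raise ValueError("bearings are parallel: no fix")
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t = (dx * d2[1] - dy * d2[0]) / denom
    return (p1[0] + t * d1[0], p1[1] + t * d1[1])


# Two listening posts 10 km apart hear the same transmitter to the north-east and north-west.
print(triangulate((0.0, 0.0), 45.0, (10.0, 0.0), 315.0))   # approximately (5.0, 5.0)
```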

Conclusion

What’s the point? Sure, AI can do some things acceptably well – translation (not interpreting) and photo recognition. ChatGPT might even be able to knock out poetry no worse than most humans. AI might even be able to drive a car in moderate traffic, although it hasn’t got there yet. In the simpler setting of aviation (less traffic and no pedestrians), basic computers (compared to AI ones) have been controlling aircraft safely for years, albeit with human oversight. It’s unlikely that any computer would have pulled off the “Miracle on the Hudson”, as no one would have programmed it to. It wasn’t a miracle either – it was a highly trained human doing his job.

The camera in my cab protects me in the event of an accident by keeping a record of what happened inside and outside. I have no problem with that. Unfortunately it also offers advice in perhaps the most annoying voice that I’ve heard – think Edwina Currie. It has two lines. The first, “Maintain safe distance!”, comes out every time the gap between me and the truck ahead falls below two seconds. The most common cause (by far) of this is some muppet cutting me up because they have almost missed their exit. Having Edwina stating the obvious while I manoeuvre, brake and check my mirrors is distracting. Her second comment is “Distracted Driving!” None of the 30 drivers in our yard has yet worked out what causes that, nor what it means. I’ve received it when taking a long look in a mirror but not when picking up a dropped sandwich; when looking for an entrance but not when reprogramming the satnav on the move; and when scanning my mirrors but not when struggling to stay awake. I suspect that it’s there to gather data on how drivers scan their mirrors and what triggers them to act, in order to populate a database for the development of some AI driving tool (it’s hooked into the mobile internet via my tachograph).
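How the camera decides isn't published, but the “Maintain safe distance!” behaviour described above looks like a simple time-gap threshold. A hypothetical sketch (the function names, the 25 m/s speed and the distances are assumptions; the two-second threshold is the one described above). Note that the rule has no input for who closed the gap, so a car cutting in triggers it just as surely as tailgating does.

```python
def time_gap_s(distance_m: float, speed_mps: float) -> float:
    """Seconds until the truck, at its current speed, reaches where the vehicle ahead is now."""
    return distance_m / speed_mps


def safe_distance_warning(distance_m: float, speed_mps: float, min_gap_s: float = 2.0) -> bool:
    """True if the time gap has fallen below the threshold (two seconds by default)."""
    return time_gap_s(distance_m, speed_mps) < min_gap_s


speed = 25.0                                  # m/s, roughly 56 mph
print(safe_distance_warning(60.0, speed))     # 2.4 s gap: False
print(safe_distance_warning(30.0, speed))     # a car cuts in 30 m ahead, 1.2 s: True
```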

Until a computer can be developed that understands everything, including parameters that are way outside the task at hand, computers will always require human oversight. (If that day comes, we’ll need much wiser programmers and lawmakers than we have ever seen before.) For futurologists to persuade health secretaries, journalists and public servants that AI is the answer to all their problems is more a restatement of the attraction of snake oil than a technological prediction.

It’s certainly no basis for government policy. But that never stopped Westminster or Whitehall.

Dear Patrick, thank you for a wonderfully engaging article.

The whole arena of advanced driver assistance systems (ADAS) is evolving rapidly as vehicle manufacturers seek to deliver SAE level 2 to 3 assistance, with the aim of full autonomy (level 5) which, as you say, remains very elusive.

I suggest the human machine interface does not, on the whole, work well since to get the best from ADAS the driver has to be fully attentive. Driver monitor systems have been around for more than a decade, and while camera resolution / image processing / analysis have been transformed, the results are not really robust.

The vehicle manufacturers have sought to automate product development - much of this is positive, but tends towards interpolation of 'library' references - which crushes genuine product innovation. Rather, it encourages an endless parade of wafer thin improvements.

AI is a tool. Not the master.